Did you know that sensitive chat you “deleted” from ChatGPT might still be on an OpenAI server, waiting to be reviewed?

You probably treat ChatGPT like a private notebook. Maybe you paste company code into it. Or you draft a sensitive email or share a personal problem. You assume it’s all confidential. In 2025, that assumption is more than just wrong. It’s dangerous.

This isn’t just a warning. We will tell you exactly what ChatGPT tracks. We will show you that “delete” does not always mean “deleted,” especially because of a 2025 legal hold. Most important, we will give you simple, step-by-step instructions to lock down your ChatGPT privacy settings today.

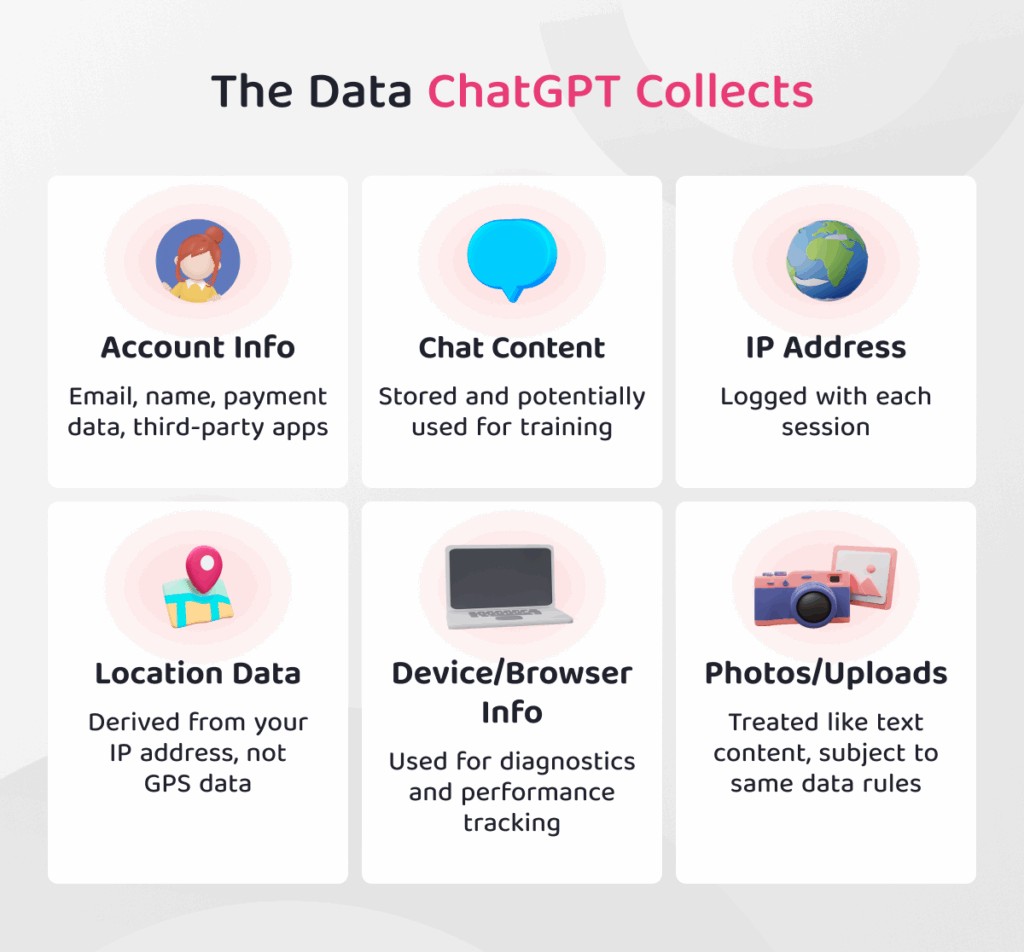

#1. First, What Does ChatGPT Track? The “Default” You Agreed To

When you signed up for ChatGPT, you agreed to its privacy policy. That policy lets OpenAI track a lot of your data.

It’s not just the words you type. The system saves your entire conversation. This includes all your prompts, all of ChatGPT’s answers, and any files you upload.

It Collects Technical Data

This is info like your IP address, which shows your general location. It logs what device you use (like an iPhone or a Windows laptop) and your browser. It even records how you use the service, like when you log in and for how long.

Your Account Information

Then there is your account information. OpenAI has your name, email, and phone number. If you pay for ChatGPT Plus, it also has your payment details.

Think about what this means. Did you paste a private work memo? OpenAI saves it. Did you ask for advice on a personal health problem? That’s saved, too. All of this information is tied directly to your account. This data is the key to ChatGPT data training.

#2. Why Your Chats Aren’t Private: Training, Safety, And A 2025 Lawsuit

So, why does OpenAI want all this data? It’s not just to keep your chat history for you. There are three main reasons your ChatGPT privacy is not what you think.

Your Chats Are Used For ChatGPT Data Training

By default, your conversations are fed back into the system to help train future models, like GPT-5. This process is not fully automated. Real human AI trainers can (and do) read your chats to check the AI’s answers and help it improve.

OpenAI Saves Your Chats For Safety

Even if you find the setting to “opt out” of training (we’ll show you how later), there’s a catch. OpenAI still keeps all your conversations for 30 days. They do this to check for anything that breaks their rules, like abuse or illegal content.

#3. The 2025 Lawsuit: The “Delete” Button is Broken

This is the critical proof. You may have heard about big lawsuits against AI companies. In the ongoing New York Times v. OpenAI case, a judge issued a court order in June 2025.

This order forces OpenAI to save all chat data. This includes chats you thought you “deleted.” The company must hold onto this data until the lawsuit is over.

This means the “Confirm clear conversations” button does not do what you think it does. As of 2025, your data is being kept under a legal hold. Your old ideas about ChatGPT privacy are wrong. That data is not gone.

#4. How to Make ChatGPT Private: 4 Actionable Steps for 2025

You can’t change your past chats, but you can protect your future ones. You have a few options. Here is how to make ChatGPT private, starting with the easiest and most important step.

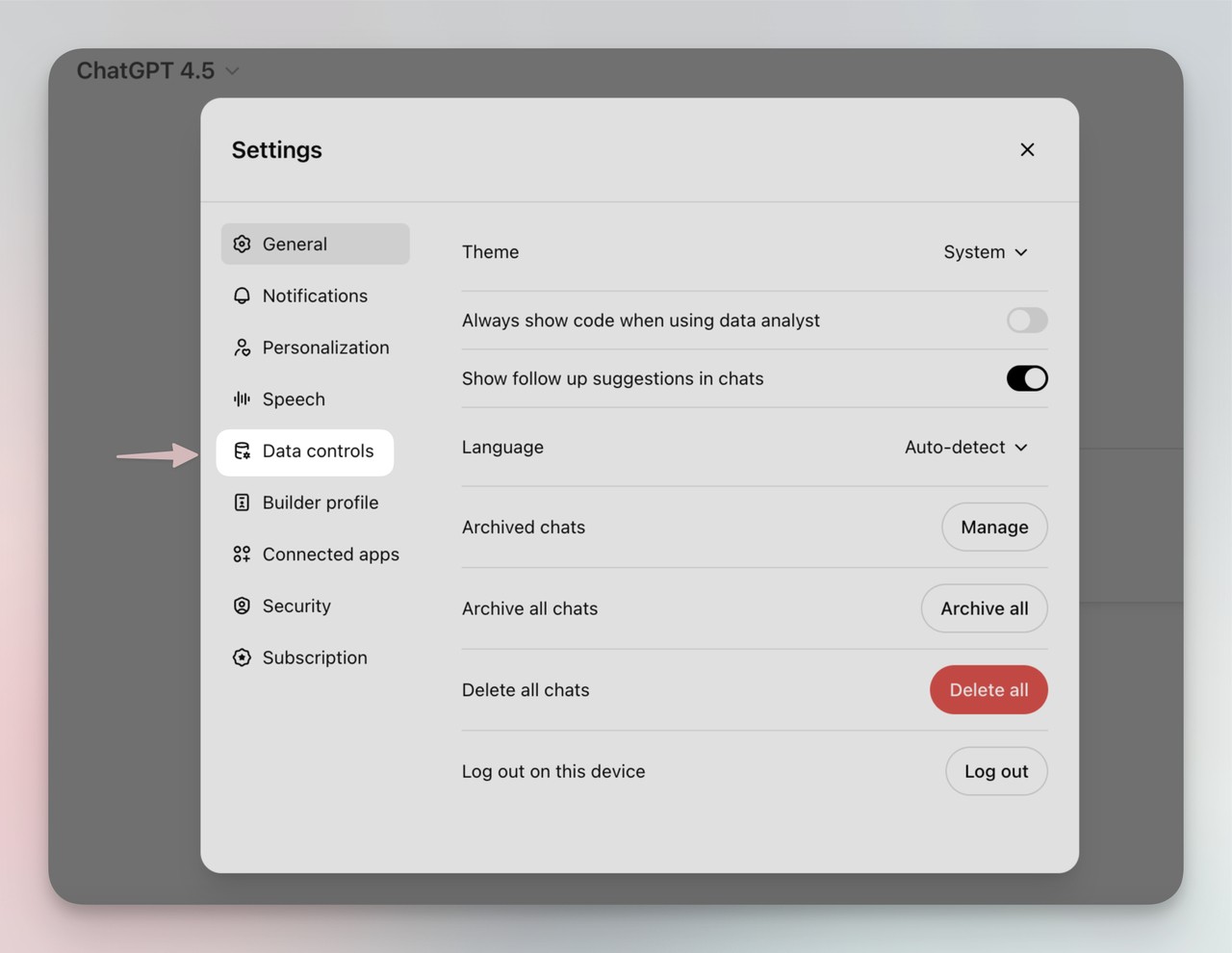

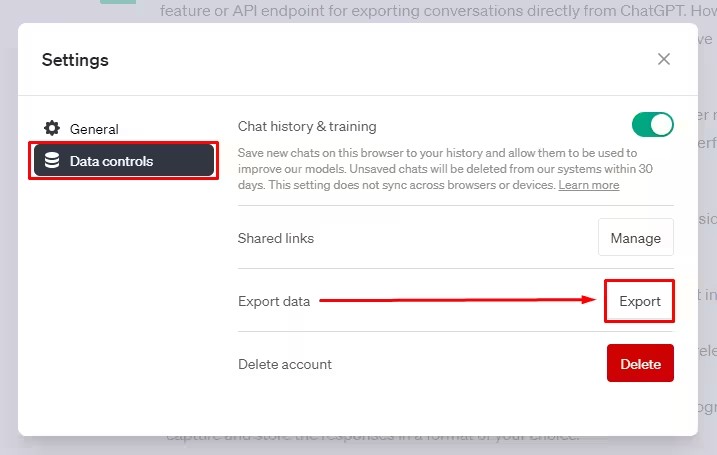

Turn Off “Chat History & Training” (The Basic Step)

This is the single most important setting for all users. It tells OpenAI to stop using your new chats for training. It also stops new chats from appearing in your sidebar.

Here is the 30-second fix:

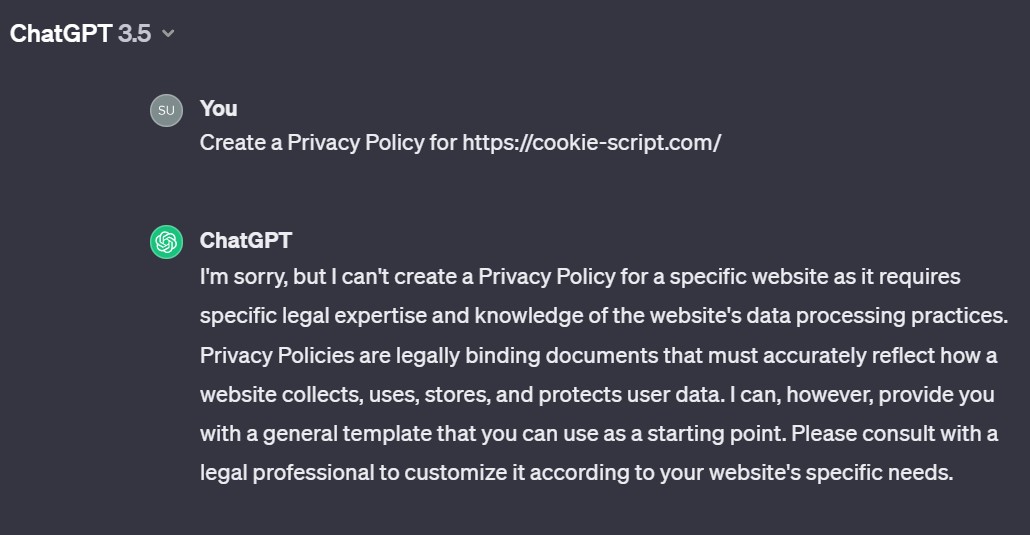

i. Log in to ChatGPT.

ii. Click your name in the bottom-left corner.

iii. Go to Settings.

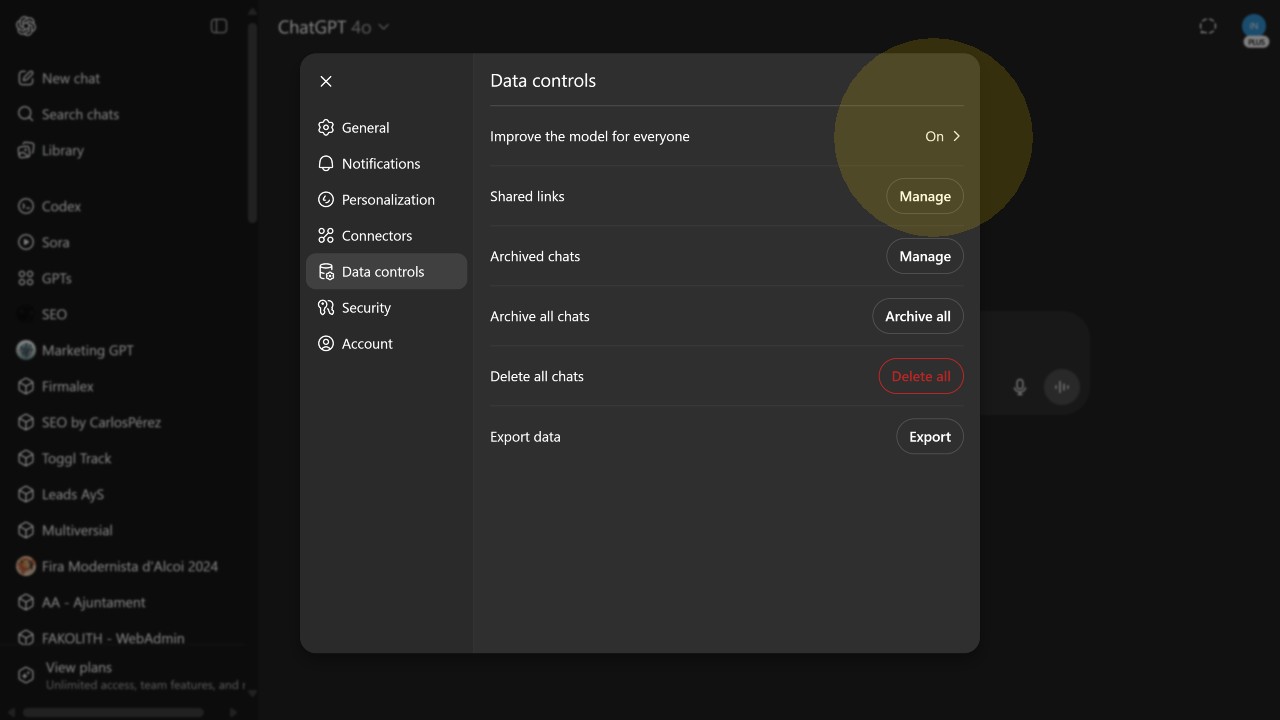

iv. Click on Data Controls.

v. Find “Chat History & Training” and turn the toggle OFF.

That’s it. But you need to know what this doesn’t do.

Disabling “Chat History & Training” is the best you can do on a free plan, but it’s not a silver bullet. OpenAI still keeps your chats for 30 days for safety review.

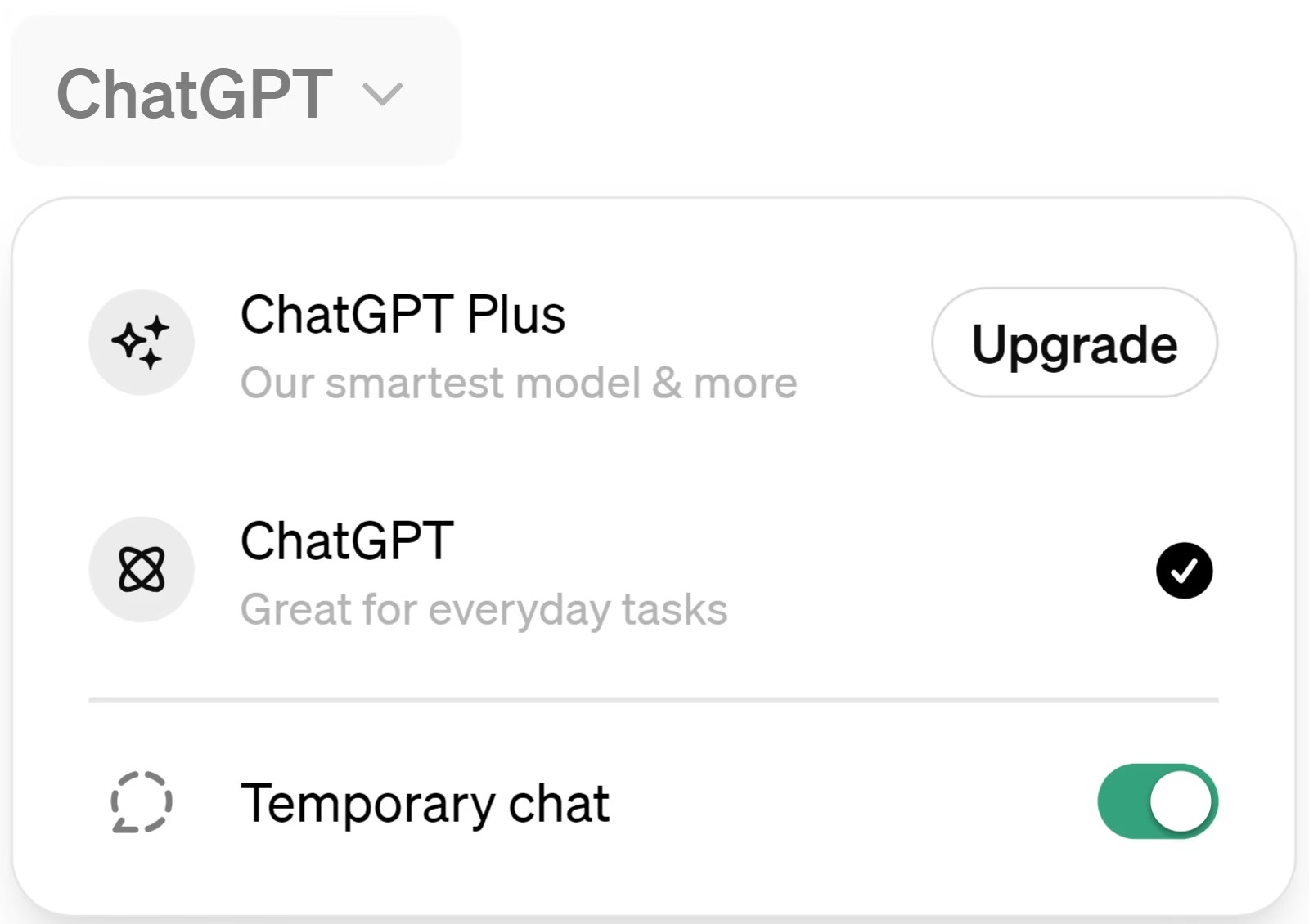

Use “Temporary Chats” (A Better, Per-Chat Solution)

This feature is a better option for single, sensitive conversations. A Temporary Chat is not saved to your history. It is not used for training. And it is auto-deleted after 30 days (unless the legal hold applies).

How to use it:

i. When you start a new chat, look at the top-center (where it says “GPT-3.5” or “GPT-4o”).

ii. Click the model name.

iii. A dropdown menu will appear. Select “Temporary chat”.

This is perfect for when you need to paste a sensitive work document or ask a personal question. Just remember to start it before you type your prompt.

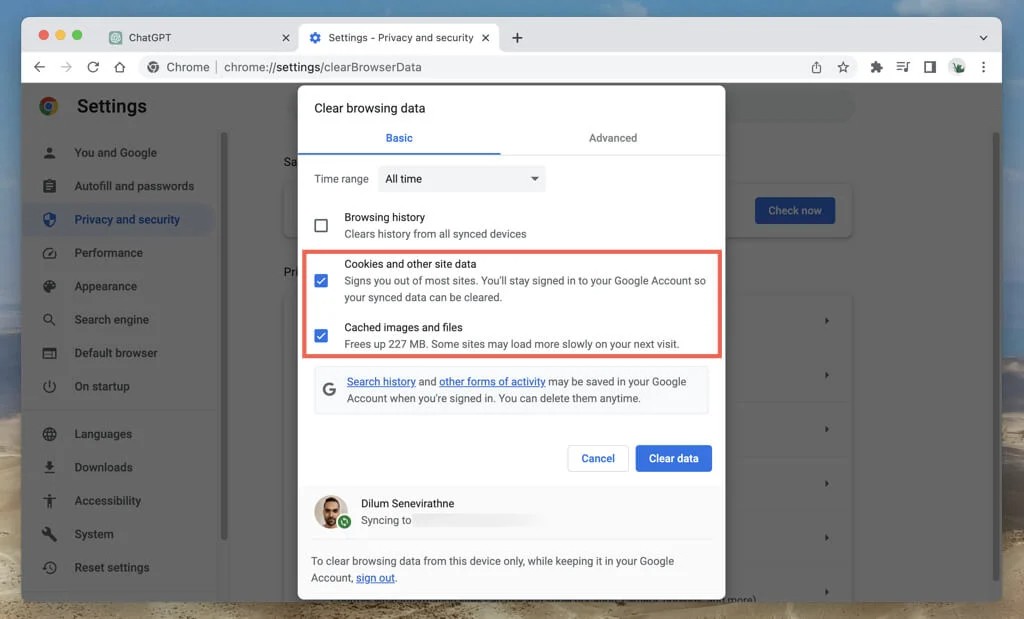

Export and Delete Your Data (The “Nuclear” Option)

If you want everything gone, you have to delete your account. This cannot be undone.

First, go to Settings and open Data Controls. You will find an option to “Export data”. This sends you a file of your chat history.

After you have your data, you can find the “Delete account” button in the same menu. This will erase your account and all its data. But this can take several weeks to fully complete.
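Before you delete anything, it can be worth checking what your export actually contains. Here is a minimal Python sketch for that. It assumes the export arrives as a ZIP archive of mostly text files, which this article does not confirm, so check your own download first. The function name `find_terms_in_export` and the search terms are made up for illustration.

```python
import zipfile


def find_terms_in_export(zip_path, terms):
    """Return {file_name: [terms found]} for each file in a ZIP archive.

    Assumption: the export is a ZIP of mostly text files (JSON, HTML).
    Matching is a plain, case-insensitive substring search.
    """
    lowered = [term.lower() for term in terms]
    hits = {}
    with zipfile.ZipFile(zip_path) as archive:
        for name in archive.namelist():
            if name.endswith("/"):
                continue  # skip directory entries
            # Decode leniently, because some files may be binary (images, audio).
            text = archive.read(name).decode("utf-8", errors="ignore").lower()
            found = [term for term, low in zip(terms, lowered) if low in text]
            if found:
                hits[name] = found
    return hits


# Hypothetical usage (the path and the terms are placeholders):
# print(find_terms_in_export("chatgpt-export.zip", ["password", "api_key"]))
```

The script only reads the archive on your own machine. It does not change anything on OpenAI’s side, and a plain keyword search will miss secrets that don’t contain one of your terms.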

The “Business” Solution (The Only Real Privacy)

The only way to have true ChatGPT privacy by default is to pay for a business plan. The ChatGPT Team and Enterprise plans do not use your company’s data for training. Period. This is the solution for any company or professional who wants to use AI without risking their data.

#5. The Real-World Risk: What Happens When AI Chats Leak?

You might be thinking, “So what? My chats are boring.” The risk is not just a theory. A data leak can have serious consequences.

Just look at the Samsung data leak in 2023. Employees at the company were using ChatGPT to help with their work. They accidentally pasted secret information into their chats. This included proprietary source code and notes from private company meetings.

That sensitive data was then saved on OpenAI’s servers. It became part of the ChatGPT privacy problem, exposed to potential human review or a future breach.

For Samsung, it was the source code. For you, it could be your company’s financial data. It could be a private client list. Or it could be a personal secret you thought was safe. This is why managing your settings matters.
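Settings only go so far. The safest text is the text you never paste. As a rough illustration, here is a small Python sketch that scrubs a few obvious patterns from text before it goes into a chat. The patterns and the `redact` helper are our own assumptions, not an OpenAI feature, and they catch only simple cases such as email addresses, IPv4 addresses, and `password=...` style values.

```python
import re

# Illustrative patterns only. Real secrets take many more forms than these.
SECRET = re.compile(r"(?i)\b(api[_-]?key|token|password)\b(\s*[:=]\s*)\S+")
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text):
    """Replace a few obvious sensitive patterns with placeholders."""
    # Keep the label ("password=") but drop the value that follows it.
    text = SECRET.sub(lambda m: f"{m.group(1)}{m.group(2)}[REDACTED]", text)
    text = EMAIL.sub("[EMAIL]", text)
    text = IPV4.sub("[IP]", text)
    return text


print(redact("password=hunter2 sent by bob@example.com from 10.0.0.1"))
```

Treat this as a first pass, not a guarantee. It will not recognize source code, client names, or financial figures, so you still need to read what you are about to paste.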